Dynamic Stereoscopic 3D Parameters

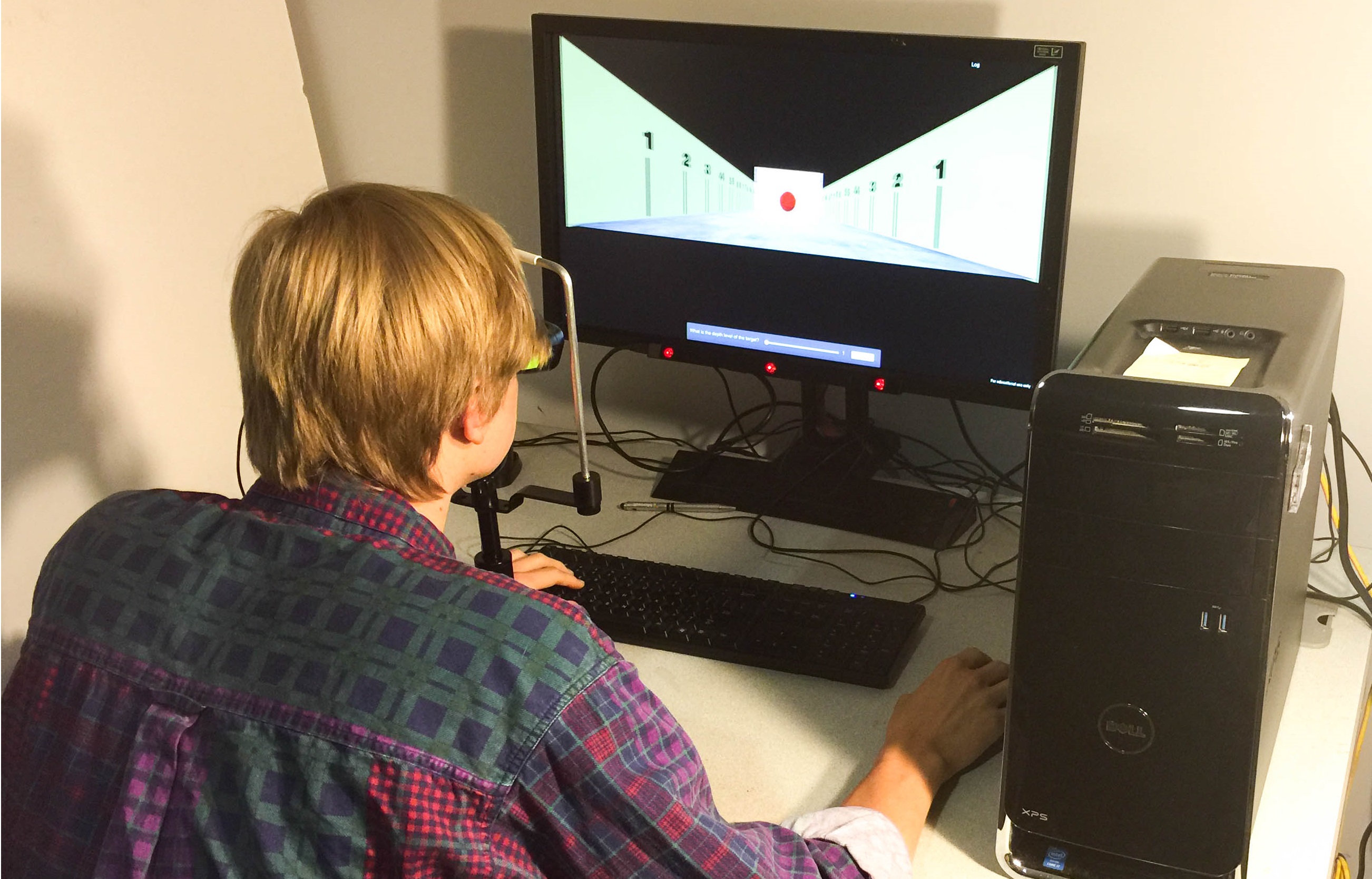

Most modern stereoscopic 3D applications (e.g., video games) render the scene on a 3D display with stereoscopic 3D parameters (separation and convergence) that are tuned once and then held fixed. Keeping these parameters fixed during usage does not provide an optimal experience, however, because it can limit depth perception in applications where object distances vary widely. We developed several stereoscopic rendering techniques that actively vary the stereo parameters based on the scene content and the user's gaze direction. Our results indicate that variable stereo parameters provide enhanced depth discrimination compared to static parameters, and that participants preferred them over the traditional fixed-parameter approach.
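To make the roles of separation and convergence concrete, the sketch below uses a commonly cited parallax model, in which a point at the convergence distance appears on the screen plane and parallax grows toward the separation value for distant points. The `choose_parameters` heuristic (converge on the gazed depth, then cap the worst-case parallax) and all names are illustrative assumptions, not the technique evaluated in the publications below.

```python
def parallax(separation, convergence, depth):
    """Screen parallax of a point at `depth` under a simple stereo model.

    Zero at the convergence distance, negative (in front of the screen)
    for nearer points, approaching `separation` for very distant points.
    """
    return separation * (1.0 - convergence / depth)


def choose_parameters(gaze_depth, near, far, max_parallax):
    """Hypothetical heuristic for picking stereo parameters per frame.

    Converges on the depth the user is looking at, then picks the largest
    separation that keeps |parallax| <= max_parallax over [near, far].
    """
    convergence = gaze_depth
    # Parallax is monotonic in depth, so the extremes occur at near and far.
    worst = max(abs(1.0 - convergence / near), abs(1.0 - convergence / far))
    separation = max_parallax / worst if worst > 0 else max_parallax
    return separation, convergence
```

With fixed parameters, a scene whose depth range changes a lot either wastes the parallax budget or exceeds it; recomputing the pair as the gaze or scene changes is one simple way to keep the budget fully used.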

Publications

- Kulshreshth, A., and LaViola Jr., J.J., "Dynamic Stereoscopic 3D Parameter Adjustment for Enhanced Depth Discrimination," Proceedings of the ACM CHI Conference on Human Factors in Computing Systems (CHI 2016), 177-187, May 2016. (SIGCHI Best of CHI Honorable Mention Award)

- Kulshreshth, A., and LaViola Jr., J.J., "Enhanced Depth Discrimination Using Dynamic Stereoscopic 3D Parameters," Proceedings of the ACM Conference Extended Abstracts on Human Factors in Computing Systems (CHI 2015), 1615-1620, April 2015.

Exploring 3D User Interface Technologies for Video Games

This project explores how the gaming experience is affected when several 3D user interface technologies are used simultaneously. We custom-designed an air-combat game integrating several 3DUI technologies (stereoscopic 3D, head tracking, and finger-count gestures) and studied the combined effect of these technologies on the gaming experience. Our game design was based on existing design principles for optimizing the usage of these technologies in isolation. Additionally, to enhance depth perception and minimize visual discomfort, the game dynamically optimizes stereoscopic 3D parameters (convergence and separation) based on the user's look direction.

We conducted a within-subjects experiment in which we examined performance data and qualitative data on users' perception of the game. Our quantitative results indicate that participants performed significantly better when all the 3DUI technologies (stereoscopic 3D, head tracking, and finger-count gestures) were available simultaneously, with head tracking as the dominant factor. Qualitatively, participants felt an enhanced sense of engagement when these technologies were present.

Publications

- Kulshreshth, A., and LaViola Jr., J.J., "Exploring 3D user interface technologies for improving the gaming experience," Proceedings of the ACM CHI Conference on Human Factors in Computing Systems (CHI 2015), 125-134, April 2015.

Finger-Based 3D Gesture Menu Selection

Counting on one's fingers is a potentially intuitive way to enumerate a list of items and lends itself naturally to gesture-based menu systems. We conducted a comprehensive study of Finger-Count menus to investigate their usefulness as a viable option for 3D menu selection tasks. Our study compares 3D gesture-based finger counting (Finger-Count menus) with 3D Marking menus and with two gesture-based menu selection techniques (Hand-n-Hold, Thumbs-Up) derived from existing motion-controlled video game menu selection strategies.

We examined selection time, selection accuracy, and user preference for all techniques. We also examined the impact of different spatial layouts for menu items and different menu depths. Our results indicate that Finger-Count menus are significantly faster than the other menu techniques we tested and are the most liked by participants. Additionally, we found that while Finger-Count menus and 3D Marking menus have similar selection accuracy, Finger-Count menus are almost twice as fast as 3D Marking menus.

Featured on Discovery News

Publications

- Kulshreshth, A., and LaViola Jr., J.J., "Exploring the usefulness of Finger-Based 3D Gesture Menu Selection," Proceedings of the ACM CHI Conference on Human Factors in Computing Systems (CHI 2014), 1093-1102, April 2014. (SIGCHI Best of CHI Honorable Mention Award)

Evaluating Benefits of Head Tracking in Video Games

The main focus of this project is to investigate the user performance benefits of head tracking in modern video games. We explored four carefully chosen commercial games with tasks that could potentially benefit from head tracking. For each game, quantitative and qualitative measures were taken to determine whether users performed better and learned faster in the experimental group (with head tracking) than in the control group (without head tracking). Our results indicate that head tracking provided a significant performance benefit for experts in two of the games tested. In addition, our results indicate that head tracking is more enjoyable in slow-paced video games and can potentially hurt performance in fast-paced modern video games.

Publications

- Kulshreshth, A., and LaViola Jr., J.J., "Evaluating performance benefits of head tracking in modern video games," Proceedings of the ACM Symposium on Spatial User Interaction (SUI 2013), 53-60, July 2013.

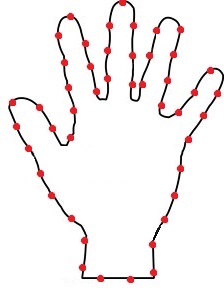

Finger Tracking and Gesture Recognition

Hand gestures are an intuitive way to interact with a variety of user interfaces. We developed a real-time finger tracking technique using the Microsoft Kinect as an input device and compared its results with an existing technique that uses the k-curvature algorithm. Our technique calculates feature vectors based on Fourier descriptors of equidistant points chosen on the silhouette of the detected hand and uses template matching to find the best match. Our results show that our technique performed as well as the existing k-curvature-based finger detection technique.
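The pipeline described above (equidistant silhouette points, Fourier descriptors, template matching) can be sketched as follows. This is a minimal illustration under stated assumptions, not the published implementation: the contour is assumed to be already extracted as a closed list of `(x, y)` points, the normalization choices and nearest-template rule are ours, and every function name is hypothetical.

```python
import cmath
import math


def resample_closed(points, n):
    """Return n points spaced equally along the closed polyline `points`."""
    pts = list(points) + [points[0]]
    seg = [math.dist(pts[i], pts[i + 1]) for i in range(len(pts) - 1)]
    total = sum(seg)
    out, i, start = [], 0, 0.0  # start = arc length at the start of segment i
    for k in range(n):
        target = total * k / n
        while i < len(seg) - 1 and start + seg[i] < target:
            start += seg[i]
            i += 1
        t = (target - start) / seg[i] if seg[i] > 0 else 0.0
        (x0, y0), (x1, y1) = pts[i], pts[i + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


def fourier_descriptor(points, n=64, keep=10):
    """Feature vector that ignores translation, rotation, and scale."""
    z = [complex(x, y) for x, y in resample_closed(points, n)]
    coeffs = [
        sum(z[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n)) / n
        for k in range(n)
    ]
    # Skip k = 0 (translation); magnitudes discard the rotation phase.
    mags = [abs(coeffs[k]) for k in range(1, keep + 1)]
    mags += [abs(coeffs[-k]) for k in range(1, keep + 1)]
    scale = max(mags) or 1.0  # dividing by the largest term removes scale
    return [m / scale for m in mags]


def classify(contour, templates):
    """Template matching: name of the template with the closest descriptor."""
    d = fourier_descriptor(contour)
    return min(templates, key=lambda name: math.dist(d, templates[name]))
```

In use, `templates` would map gesture names to descriptors computed once from reference silhouettes, and `classify` would be called on each frame's hand contour.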

Publications

- Kulshreshth, A., Zorn, C., and LaViola Jr., J.J., "Real-time markerless Kinect based finger tracking and gesture recognition for HCI," Proceedings of the IEEE Symposium on 3D User Interfaces, 187-188, March 2013.

Evaluating 3D Stereo in Motion Enabled Games

The main focus of this project is to evaluate the effects of 3D stereo in motion-controlled games. We conducted a study investigating whether user performance is enhanced when using 3D stereo over a traditional 2D monitor coupled with a 3D spatial interaction device in modern 3D stereo games. We made use of the PlayStation 3 game console, the PlayStation Move controller, and five carefully chosen game titles as a representative sample of modern motion-enabled games.

Publications

- Kulshreshth, A., Schild, J., and LaViola Jr., J.J., "Evaluating user performance in 3D stereo and motion enabled video games," Proceedings of the ACM International Conference on the Foundations of Digital Games (FDG 2012), 33-40, May 2012.

PaintToMove Application

Physical therapy is a necessary but often frustrating process for many people. It requires constantly repeated exercises that are not only boring over time but can also be painful. For these reasons, many physical therapy patients have trouble motivating themselves to complete treatment. We are developing software that encourages patients by occupying their minds with an engaging experience, distracting them from these negative influences. The software can also be adjusted based on the patient's stage of rehabilitation.

Paint to Move is an application in which users can paint virtually on a 2D canvas by using a Sony Move controller as a paint brush. The colored tip of the Move controller indicates the color of the paint, changing as a user dips the Move controller into different colored virtual paint cups. Work on an earlier version of this prototype suggests that users tend to focus on the task rather than on the repetitive arm motions they need to make, which is desirable in physical therapy applications. We believe the Sony Move controller is an ideal input device for our application because of its accurate 6DOF tracking and low cost.

Featured in Move.Me application release video

- PlayStation Blog Link

- Look at the second video on the right

Seamless Stereoscopic 3D Rendering on Multiwall Display

This project involves displaying stereoscopic images on a large wall display consisting of three rear-projected screens. Each screen is controlled by a computer and displays stereoscopic images by using two projectors (one for each eye). The setup therefore has three slave computers and one master computer that controls them. We developed a software solution to render seamless stereoscopic images on this wall display by dividing the scene to be rendered into parts and providing each slave machine with the information it needs to render its part of the whole image.
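One way such a division could work is sketched below: the master splits a single view frustum into side-by-side slices and sends each slave the near-plane bounds for its slice, once per eye. This assumes three flat, coplanar, equally wide screens and the standard off-axis stereo shift; the function names, parameters, and data layout are illustrative and do not describe the actual software.

```python
def tile_frusta(left, right, bottom, top, tiles=3):
    """Split near-plane frustum bounds into `tiles` side-by-side slices."""
    width = (right - left) / tiles
    return [
        (left + i * width, left + (i + 1) * width, bottom, top)
        for i in range(tiles)
    ]


def stereo_tile_frusta(left, right, bottom, top,
                       near, convergence, separation, tiles=3):
    """Per-screen, per-eye frustum bounds for an off-axis stereo wall.

    Each eye's camera is assumed to be offset by half the separation, so
    its frustum is shifted the opposite way by the offset scaled to the
    near plane. Neighbouring slices share an edge, which is what keeps
    the image seamless across screens.
    """
    shift = 0.5 * separation * near / convergence
    result = []
    for l, r, b, t in tile_frusta(left, right, bottom, top, tiles):
        result.append({
            "left_eye": (l + shift, r + shift, b, t),
            "right_eye": (l - shift, r - shift, b, t),
        })
    return result
```

Each returned tuple has the `(left, right, bottom, top)` order that a `glFrustum`-style projection call expects, so a slave would only need its own entry plus the shared camera pose.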

Polycrystal Orientation Maps from 3D X-ray Diffraction Data

The aim was to reconstruct the 2D orientation map of a polycrystal (such as a metal) from its X-ray diffraction projection data. Knowledge of the orientation map is of interest to materials scientists because the physical and chemical properties of a polycrystal are related to its orientation map.

Publications

- Kulshreshth, A., Alpers, A., Herman, G.T., Knudsen, E., Rodek, L., and Poulsen, H.F., "A greedy method for obtaining polycrystal orientation maps from three-dimensional X-ray diffraction data," Journal of Inverse Problems and Imaging, Vol. 3, No. 1, 69-85, 2008.